Semantic Search

Sometimes when you’re searching documents you aren’t interested in an exact match. If you’re investigating financial dealings across 70,000 emails, you don’t just want to ctrl+f and search for “money” – you’re interested in “currency” and “finances” and “debt” and everything else with the same feeling as “money.”

Searching using similarity that’s not an exact word-for-word match is called semantic search. It’s been around for a while, but it’s gotten much much much more popular with the rise of large language models!

Semantic search also goes far beyond just one-word synonyms like the example above.For example. XXXXX

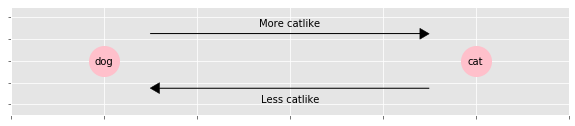

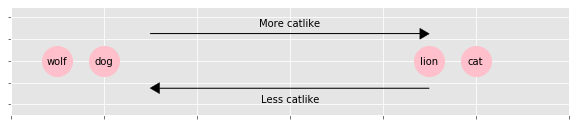

Embeddings

Any time someone explains semantic search they’re legally obligated to go very very very deep into a technical topic called embeddings. We’ll take a look at embeddings briefly so we have a better understanding of limitations when we’re using semantic search.

Semantic search

Semantic search is a (mostly) three-step process:

- Create embeddings for all of your documents

- Create an embedding for your search string

- Find all of the documents that are similar to your search string

“Documents” is vague here, it can be any practically any piece of text – a word, a sentence, a paragraph, an email, etc. In the example below, we’ll use

Gotchas

Types of matches

Different embeddings

Across languages

Most embedding models just look at English, unless they note otherwise – for example, uer/sbert-base-chinese-nli is a model that’s Chinese-only. But what happens if your data is in a mix of languages? No worries, embeddings can work just as well… as long as you select a multilingual model!

For a real-life use case, here’s a great writeup by Jeremy Merrill about the process for analyzing a 356-gigabytes leak that included documents in both English and Portuguese:

For instance, searching for sentences similar to “establishing a new corporation” found these sentence fragments as the top two matches:

- “nova entidade para a sociedade,” Portuguese for “a new entity for the company” in an email discussing creating a new corporate structure.

- “of the firm as newly constituted,” from a law authorizing secretive corporate structures in Mauritius.

You can see an example comparing a multilingual model to an English-only model below. Feel free to adjust the sentences if you’d like to test it out across other languages!

The model we’re using provides a list of 50+ languages it knows – how well it works for any given pair, though, I can’t quite say!